1. Algorithm Overview

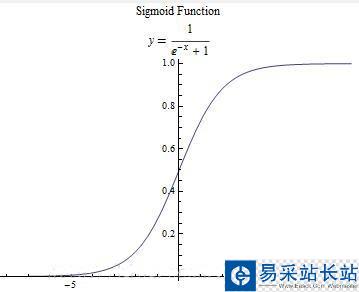

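We want a function that takes an input and predicts its class, so that it can be used for classification. For this we use the sigmoid function from mathematics, sigmoid(x) = 1 / (1 + e^(-x)). Its expression and graph are shown below: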

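The graph shows clearly that when the input x is less than 0, the function value is below 0.5 and the sample is classified as 0; when x is greater than 0, the value is above 0.5 and the sample is classified as 1. At x = 0 the value is exactly 0.5.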

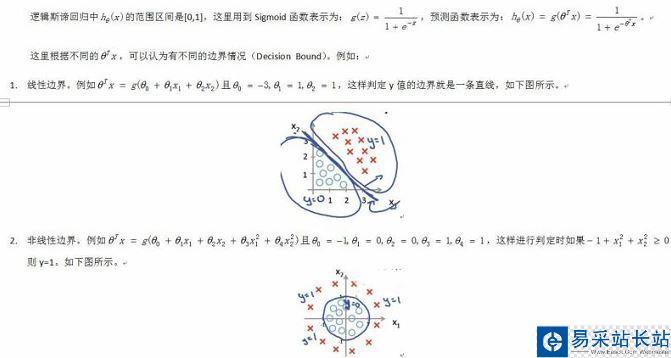
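A minimal NumPy sketch of the sigmoid and the 0.5 threshold described above. The helper `predict_class` is hypothetical (not part of the original code); like `classifyVector` in section 2, it predicts class 1 only when the sigmoid value is strictly greater than 0.5.

```python
import numpy as np

def sigmoid(z):
    """Logistic sigmoid 1 / (1 + e^(-z)), applied element-wise."""
    return 1.0 / (1.0 + np.exp(-z))

def predict_class(z):
    """Hypothetical helper: class 1 if sigmoid(z) > 0.5, else class 0."""
    return (sigmoid(z) > 0.5).astype(int)

z = np.array([-5.0, -0.1, 0.0, 0.1, 5.0])
print(sigmoid(z))        # values below 0.5 for z < 0, above 0.5 for z > 0
print(predict_class(z))  # [0 0 0 1 1]
```

Note that z = 0 gives exactly 0.5 and therefore falls into class 0 under the strict `>` comparison.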

1.1 Representation of the Prediction Function

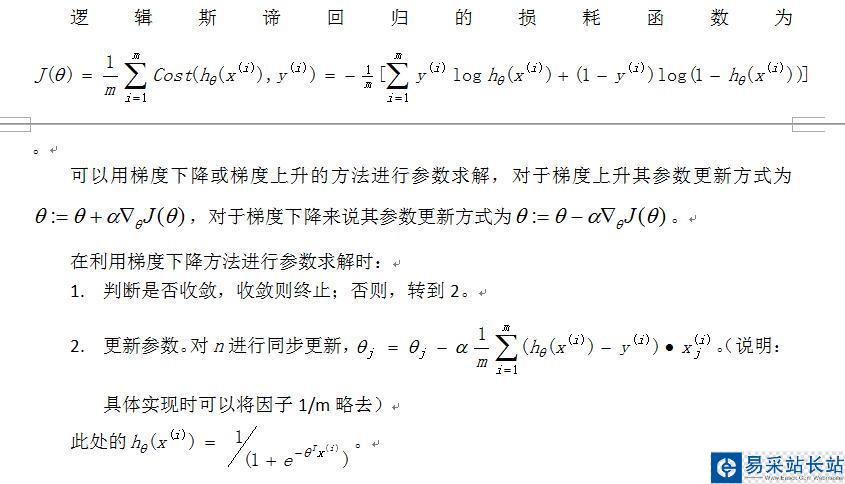
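The prediction function appears only as an image in the source. A standard form consistent with the code in section 2 is sketched below, assuming the notation $w$ for the parameter vector (the code's `weights`) and a constant feature $x_0 = 1$, which is why `loadDataSet` prepends `1.0` to every sample:

$$
h_w(x) = \sigma(w^{T} x) = \frac{1}{1 + e^{-w^{T} x}}, \qquad w^{T} x = w_0 + w_1 x_1 + w_2 x_2
$$

$$
P(y = 1 \mid x; w) = h_w(x), \qquad P(y = 0 \mid x; w) = 1 - h_w(x)
$$

Predicting class 1 when $h_w(x) > 0.5$ is equivalent to predicting it when $w^{T} x > 0$, so the decision boundary is the line $w_0 + w_1 x_1 + w_2 x_2 = 0$, i.e. $x_2 = (-w_0 - w_1 x_1) / w_2$, which is the line `plotBestFit` draws.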

1.2 Solving for the Parameters
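This subsection has no text in the source. A sketch of the usual derivation follows, assuming the parameters are fitted by maximizing the log-likelihood over $m$ training samples, which is what the gradient-ascent functions in section 2 do:

$$
\ell(w) = \sum_{i=1}^{m} \Big[ y^{(i)} \log h_w\big(x^{(i)}\big) + \big(1 - y^{(i)}\big) \log\big(1 - h_w\big(x^{(i)}\big)\big) \Big]
$$

Using $\sigma'(z) = \sigma(z)\,\big(1 - \sigma(z)\big)$, the partial derivatives simplify to

$$
\frac{\partial \ell(w)}{\partial w_j} = \sum_{i=1}^{m} \big( y^{(i)} - h_w\big(x^{(i)}\big) \big)\, x_j^{(i)}
$$

With $X$ the $m \times n$ data matrix and $y$ the label vector, batch gradient ascent and its single-sample (stochastic) version are

$$
w \leftarrow w + \alpha\, X^{T} \big( y - \sigma(Xw) \big), \qquad
w \leftarrow w + \alpha \big( y^{(i)} - h_w\big(x^{(i)}\big) \big)\, x^{(i)}
$$

The first rule matches `gradAscent` (`error = labelMat - h`, then `weights + alpha * X^T * error`); the second matches the per-sample updates in `stoGradAscent0` and `stoGradAscent1`.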

2. Code Implementation

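The function `sigmoid` computes the sigmoid value. `gradAscent` implements batch gradient ascent, meaning that every iteration takes the entire data set into account. `stoGradAscent0` instead walks through the samples once, updating the weights with one sample at a time, which greatly reduces the cost of each update. `stoGradAscent1` improves on stochastic gradient ascent in two ways: the step size alpha is recomputed at every update, shrinking as training proceeds while never dropping below 0.01, and the sample used for each update is chosen at random (without replacement within a pass).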
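To see how the step size in `stoGradAscent1` behaves, the formula alpha = 4/(1+j+i) + 0.01 can be tabulated on its own, where j is the pass over the data and i the update within that pass. The function name `alpha_schedule` is hypothetical:

```python
def alpha_schedule(num_iter, m):
    """Step sizes used by stoGradAscent1: alpha = 4/(1+j+i) + 0.01
    for pass j = 0..num_iter-1 and update i = 0..m-1 within the pass."""
    return [[4 / (1.0 + j + i) + 0.01 for i in range(m)] for j in range(num_iter)]

for j, row in enumerate(alpha_schedule(num_iter=3, m=4)):
    print(j, [round(a, 3) for a in row])
```

Within a pass alpha decreases with i; at the start of the next pass it restarts from 4/(1+j) + 0.01, which is smaller than the previous pass's starting value. So alpha trends downward without being strictly monotone, and the constant 0.01 keeps later samples from being ignored entirely.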

```python
import numpy as np
import matplotlib.pyplot as plt


def loadDataSet():
    """Load testSet.txt: each line holds x1, x2 and a 0/1 class label."""
    dataMat = []
    labelMat = []
    with open('testSet.txt') as fr:
        for line in fr:
            lineArr = line.strip().split()  # tab/whitespace-separated fields
            if not lineArr:
                continue  # skip blank lines
            # Prepend x0 = 1.0 so that weights[0] acts as the intercept.
            dataMat.append([1.0, float(lineArr[0]), float(lineArr[1])])
            labelMat.append(int(lineArr[2]))
    return dataMat, labelMat


def sigmoid(inX):
    return 1.0 / (1 + np.exp(-inX))


def gradAscent(dataMatIn, classLabels):
    """Batch gradient ascent: every iteration uses the whole data set."""
    dataMatrix = np.asarray(dataMatIn, dtype=float)                  # (m, n)
    labelMat = np.asarray(classLabels, dtype=float).reshape(-1, 1)   # (m, 1)
    m, n = dataMatrix.shape
    alpha = 0.001
    maxCycles = 500
    weights = np.ones((n, 1))
    errors = []
    for k in range(maxCycles):
        h = sigmoid(dataMatrix @ weights)  # predictions for all m samples
        error = labelMat - h
        errors.append(np.sum(error))       # summed error at this iteration
        # w <- w + alpha * X^T (y - h)
        weights = weights + alpha * dataMatrix.T @ error
    return weights, errors


def stoGradAscent0(dataMatIn, classLabels):
    """Stochastic gradient ascent: one pass, one sample per update."""
    dataMatIn = np.asarray(dataMatIn, dtype=float)
    m, n = dataMatIn.shape
    alpha = 0.01
    weights = np.ones(n)
    for i in range(m):
        h = sigmoid(np.sum(dataMatIn[i] * weights))
        error = classLabels[i] - h
        weights = weights + alpha * error * dataMatIn[i]
    return weights


def stoGradAscent1(dataMatrix, classLabels, numIter=150):
    """Improved stochastic gradient ascent: decaying alpha, random sample order."""
    dataMatrix = np.asarray(dataMatrix, dtype=float)
    m, n = dataMatrix.shape
    weights = np.ones(n)
    for j in range(numIter):
        dataIndex = list(range(m))  # samples not yet used in this pass
        for i in range(m):
            # Shrinks as j and i grow, but never drops below 0.01.
            alpha = 4 / (1.0 + j + i) + 0.01
            # Pick a random remaining sample (sampling without replacement).
            randIndex = int(np.random.uniform(0, len(dataIndex)))
            sampleIndex = dataIndex[randIndex]
            h = sigmoid(np.sum(dataMatrix[sampleIndex] * weights))
            error = classLabels[sampleIndex] - h
            weights = weights + alpha * error * dataMatrix[sampleIndex]
            del dataIndex[randIndex]
    return weights


def plotError(errs):
    """Plot the summed error recorded by gradAscent at each iteration."""
    x = range(1, len(errs) + 1)
    plt.plot(x, errs, 'g--')
    plt.show()


def plotBestFit(wei):
    """Plot the two classes of testSet.txt and the fitted decision boundary."""
    weights = np.asarray(wei, dtype=float).ravel()  # accepts (n, 1) or (n,)
    dataMat, labelMat = loadDataSet()
    dataArr = np.array(dataMat)
    pos = np.array(labelMat) == 1
    fig = plt.figure()
    ax = fig.add_subplot(111)
    ax.scatter(dataArr[pos, 1], dataArr[pos, 2], s=30, c='red', marker='s')
    ax.scatter(dataArr[~pos, 1], dataArr[~pos, 2], s=30, c='green')
    x = np.arange(-3.0, 3.0, 0.1)
    # Boundary sigmoid(w^T x) = 0.5  <=>  w0 + w1*x1 + w2*x2 = 0
    y = (-weights[0] - weights[1] * x) / weights[2]
    ax.plot(x, y)
    plt.xlabel('x1')
    plt.ylabel('x2')
    plt.show()


def classifyVector(inX, weights):
    prob = sigmoid(np.sum(inX * weights))
    return 1.0 if prob > 0.5 else 0.0


def colicTest(ftr, fte, numIter):
    """Train on ftr, return the error rate on fte (21 features + label per line)."""
    trainingSet = []
    trainingLabels = []
    with open(ftr) as frTrain:
        for line in frTrain:
            currLine = line.strip().split('\t')
            trainingSet.append([float(v) for v in currLine[:21]])
            trainingLabels.append(float(currLine[21]))
    trainWeights = stoGradAscent1(np.array(trainingSet), trainingLabels, numIter)
    errorCount = 0
    numTestVec = 0
    with open(fte) as frTest:
        for line in frTest:
            numTestVec += 1
            currLine = line.strip().split('\t')
            lineArr = np.array([float(v) for v in currLine[:21]])
            if int(classifyVector(lineArr, trainWeights)) != int(float(currLine[21])):
                errorCount += 1
    return errorCount / numTestVec


def multiTest(ftr, fte, numT, numIter):
    """Repeat colicTest numT times and report each and the average error rate."""
    errors = [colicTest(ftr, fte, numIter) for _ in range(numT)]
    print("Ran %d tests with %d iterations each." % (len(errors), numIter))
    for i, err in enumerate(errors, start=1):
        print("Test %d error rate: %f" % (i, err))
    print("Average error rate:", sum(errors) / len(errors))


if __name__ == '__main__':
    # Experiments on testSet.txt (uncomment to run):
    # data, labels = loadDataSet()
    # weights, errors = gradAscent(data, labels)
    # print(weights); plotBestFit(weights)
    # weights0 = stoGradAscent0(data, labels)
    # print(weights0); plotBestFit(weights0)
    # weights1 = stoGradAscent1(data, labels, 500)
    # print(weights1); plotBestFit(weights1)
    multiTest(r"horseColicTraining.txt", r"horseColicTest.txt", 10, 500)
```
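Since testSet.txt is not included with the post, the batch and improved stochastic update rules can be compared on hypothetical synthetic data: two Gaussian clusters, with a leading column of ones for the intercept. This is a compact NumPy re-implementation under those assumptions, not the author's code; drawing the sample order with `rng.permutation` is used here as an equivalent way of sampling without replacement within each pass, and the names `batch_grad_ascent`, `improved_sgd` and `accuracy` are hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def batch_grad_ascent(X, y, alpha=0.001, max_cycles=500):
    """Every step uses all samples: w <- w + alpha * X^T (y - sigmoid(Xw))."""
    w = np.ones(X.shape[1])
    for _ in range(max_cycles):
        w = w + alpha * X.T @ (y - sigmoid(X @ w))
    return w


def improved_sgd(X, y, num_iter=150, rng=None):
    """One sample per update, visited in random order without repeats in a pass,
    with the step size alpha = 4/(1+j+i) + 0.01 used by stoGradAscent1."""
    rng = np.random.default_rng() if rng is None else rng
    m, n = X.shape
    w = np.ones(n)
    for j in range(num_iter):
        for i, k in enumerate(rng.permutation(m)):
            alpha = 4 / (1.0 + j + i) + 0.01
            w = w + alpha * (y[k] - sigmoid(X[k] @ w)) * X[k]
    return w


def accuracy(X, y, w):
    """Fraction of samples whose 0.5-thresholded prediction matches the label."""
    return np.mean((sigmoid(X @ w) > 0.5).astype(float) == y)


# Hypothetical stand-in for testSet.txt: two well-separated Gaussian clusters.
rng = np.random.default_rng(0)
n_per_class = 50
pos = rng.normal(loc=[1.5, 1.5], scale=0.8, size=(n_per_class, 2))
neg = rng.normal(loc=[-1.5, -1.5], scale=0.8, size=(n_per_class, 2))
X = np.hstack([np.ones((2 * n_per_class, 1)), np.vstack([pos, neg])])  # x0 = 1
y = np.concatenate([np.ones(n_per_class), np.zeros(n_per_class)])

w_batch = batch_grad_ascent(X, y)
w_sgd = improved_sgd(X, y, rng=rng)
print("batch:     ", w_batch, accuracy(X, y, w_batch))
print("stochastic:", w_sgd, accuracy(X, y, w_sgd))
```

The batch version performs 500 full passes over the data, while the stochastic version touches one sample per update; on data this well separated both should land on a similar boundary, with the exact weights depending on the random seed.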
