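Getting PCM data

Processing PCM data

Float32 to Int16

ArrayBuffer to Base64

Playing PCM files

Resampling

PCM to MP3

PCM to WAV

Short-time energy calculation

Optimizing performance with Web Workers

Audio storage (IndexedDB)

Enabling WebRTC in WebView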

Getting PCM data

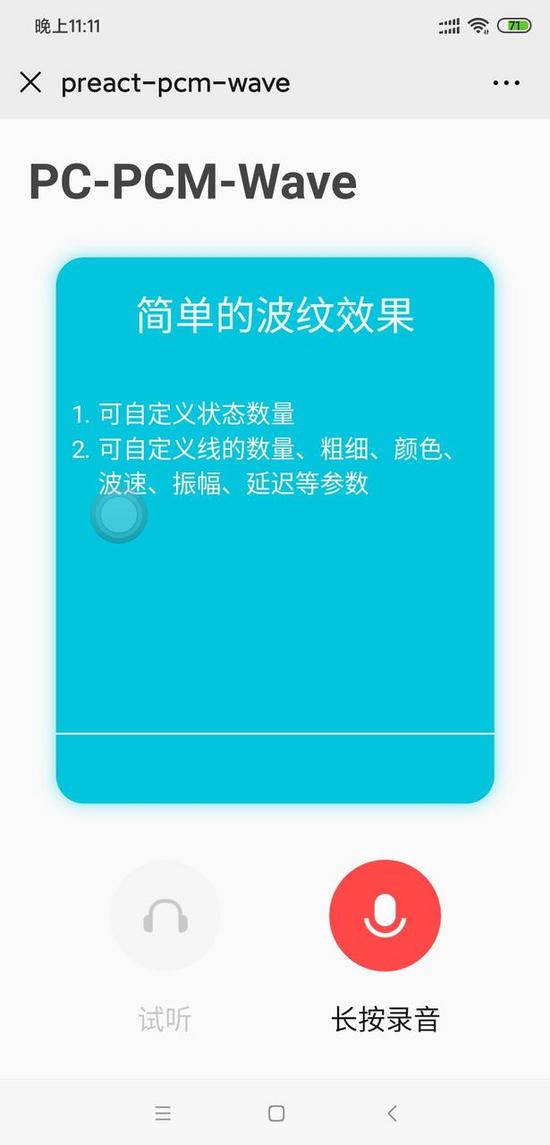

View the DEMO

https://github.com/deepkolos/pc-pcm-wave

Sample code:

```javascript
const mediaStream = await window.navigator.mediaDevices.getUserMedia({
  audio: {
    // sampleRate: 44100, // sample rate: has no effect, so resample manually
    channelCount: 1, // number of channels
    // echoCancellation: true,
    // noiseSuppression: true, // noise suppression: works well in practice
  },
})
const audioContext = new window.AudioContext()
const inputSampleRate = audioContext.sampleRate
const mediaNode = audioContext.createMediaStreamSource(mediaStream)

// Older WebKit builds only provide createJavaScriptNode
if (!audioContext.createScriptProcessor) {
  audioContext.createScriptProcessor = audioContext.createJavaScriptNode
}
// Create a ScriptProcessor node: buffer size 4096, 1 input channel, 1 output channel
// (ScriptProcessorNode is deprecated in favour of AudioWorkletNode but still works)
const jsNode = audioContext.createScriptProcessor(4096, 1, 1)
jsNode.connect(audioContext.destination)
jsNode.onaudioprocess = (e) => {
  // e.inputBuffer.getChannelData(0) is the left channel
  // for stereo, e.inputBuffer.getChannelData(1) is the right channel
}
mediaNode.connect(jsNode)
```

The basic flow is:

```flow
start=>start: Start
getUserMedia=>operation: Get the MediaStream
audioContext=>operation: Create the AudioContext
scriptNode=>operation: Create the scriptNode and connect it to the AudioContext
onaudioprocess=>operation: Set onaudioprocess and process the data
end=>end: End

start->getUserMedia->audioContext->scriptNode->onaudioprocess->end
```

To stop recording, disconnect the nodes attached to the audioContext, then merge the stored frames to produce the PCM data.

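```javascript
jsNode.disconnect()
mediaNode.disconnect()
jsNode.onaudioprocess = null
// Also release the microphone so the browser's recording indicator turns off
mediaStream.getTracks().forEach(track => track.stop())
```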
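Collecting frames during recording and merging them on stop can be wrapped in a small helper. This is a minimal sketch; `createFrameCollector` is a hypothetical name, not part of the original code. Like the article's own code, it copies each frame with `.slice(0)`, so stored data stays independent of the buffer handed to `onaudioprocess`.

```javascript
// Hypothetical helper: collect mono frames from onaudioprocess,
// then merge them into one Float32Array when recording stops.
function createFrameCollector() {
  const frames = []
  let totalLength = 0
  return {
    // Store a copy of the frame so later changes to the source buffer cannot affect it
    push(channelData) {
      const copy = channelData.slice(0)
      frames.push(copy)
      totalLength += copy.length
    },
    // Concatenate all stored frames into one contiguous PCM array
    merge() {
      const pcm = new Float32Array(totalLength)
      let offset = 0
      for (const frame of frames) {
        pcm.set(frame, offset)
        offset += frame.length
      }
      return pcm
    },
    // Drop stored frames so the collector can be reused for the next recording
    reset() {
      frames.length = 0
      totalLength = 0
    },
  }
}
```

In the browser it would be fed with `jsNode.onaudioprocess = e => collector.push(e.inputBuffer.getChannelData(0))`, and `collector.merge()` called after the nodes are disconnected.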

Processing PCM data

PCM data obtained through WebRTC is in Float32 format. For stereo recordings, the two channels also need to be merged (interleaved).

```javascript
// Stereo capture needs a 2-channel node, e.g. createScriptProcessor(4096, 2, 2)
const leftDataList = []
const rightDataList = []
function onAudioProcess(event) {
  // One frame of audio PCM data
  const audioBuffer = event.inputBuffer
  // slice(0) copies the data so each stored frame is independent
  leftDataList.push(audioBuffer.getChannelData(0).slice(0))
  rightDataList.push(audioBuffer.getChannelData(1).slice(0))
}
// Interleave the (merged) left and right channel data: L0 R0 L1 R1 ...
function interleaveLeftAndRight(left, right) {
  const totalLength = left.length + right.length
  const data = new Float32Array(totalLength)
  for (let i = 0; i < left.length; i++) {
    const k = i * 2
    data[k] = left[i]
    data[k + 1] = right[i]
  }
  return data
}
```

Float32 to Int16

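```javascript
const float32 = new Float32Array(1)
// Clamp to [-1, 1] so out-of-range samples don't wrap around,
// then scale negatives by 0x8000 and positives by 0x7fff
const int16 = Int16Array.from(
  float32.map(x => {
    const s = Math.max(-1, Math.min(1, x))
    return s > 0 ? s * 0x7fff : s * 0x8000
  }),
)
```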
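Going the other way is occasionally needed, for example to analyse or play back Int16 PCM that was stored earlier. A minimal sketch of the inverse mapping, assuming the same asymmetric scaling as above; `int16ToFloat32` is a hypothetical helper name.

```javascript
// Inverse of the conversion above: Int16 -> Float32 in [-1, 1].
// Negative values are divided by 0x8000 and positive ones by 0x7fff,
// mirroring the scaling used when encoding.
function int16ToFloat32(int16) {
  const float32 = new Float32Array(int16.length)
  for (let i = 0; i < int16.length; i++) {
    const v = int16[i]
    float32[i] = v < 0 ? v / 0x8000 : v / 0x7fff
  }
  return float32
}
```

The result can be written into a Web Audio buffer with `audioBuffer.copyToChannel(float32, 0)`.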

ArrayBuffer to Base64

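Note: browsers provide a btoa() function that also produces Base64, but its argument must be a string. If you pass a buffer, it is first converted with toString() and that string is what gets encoded, so the result is not the audio data; decoding it and playing it with ffplay produces harsh noise.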
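If you'd rather avoid a dependency, btoa() can still be used correctly by first turning the bytes into a "binary string" (one character per byte, codes 0 to 255), which is the input btoa expects. A minimal sketch; `arrayBufferToBase64` is a hypothetical name, and the chunking is there only to avoid passing too many arguments to `String.fromCharCode` at once.

```javascript
// Encode an ArrayBuffer as Base64 with the built-in btoa().
function arrayBufferToBase64(buffer) {
  const bytes = new Uint8Array(buffer)
  const chunkSize = 0x8000 // build the string in chunks to keep argument lists small
  let binary = ''
  for (let i = 0; i < bytes.length; i += chunkSize) {
    // Each byte becomes one char code in the range 0-255
    binary += String.fromCharCode.apply(null, bytes.subarray(i, i + chunkSize))
  }
  return btoa(binary)
}
```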

The base64-arraybuffer library does the job:

```javascript
import { encode } from 'base64-arraybuffer'

const float32 = new Float32Array(1)
const int16 = Int16Array.from(
  float32.map(x => (x > 0 ? x * 0x7fff : x * 0x8000)),
)
console.log(encode(int16.buffer))
```

To check that the Base64 output is correct, decode it in Node into an Int16 PCM file and play it with ffplay to confirm the audio sounds normal.
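The Node side of that check can be sketched as follows; `base64ToPcmFile` is a hypothetical helper. Typed arrays use the platform's byte order, which is little-endian on practically all browser platforms, so the decoded file is raw `s16le` data.

```javascript
// Node.js sketch: decode Base64 produced in the browser back into raw
// Int16 PCM bytes and write them to disk for playback with ffplay.
const fs = require('fs')

function base64ToPcmFile(base64, outputPath) {
  const bytes = Buffer.from(base64, 'base64')
  fs.writeFileSync(outputPath, bytes)
  return bytes.length // number of bytes written
}
```

Then play it with `ffplay -f s16le -ar 16k -ac 1 test.pcm`, making sure `-ar` matches the sample rate the data was recorded or resampled at.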

Playing PCM files

```shell
# Mono, 16000 Hz sample rate, Int16
ffplay -f s16le -ar 16k -ac 1 test.pcm
# Stereo, 48000 Hz sample rate, Float32
ffplay -f f32le -ar 48000 -ac 2 test.pcm
```
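To try the first command without a recording, a test tone can be generated in Node. A minimal sketch; `makeSinePcm` is a hypothetical helper that writes mono 16-bit little-endian samples at half amplitude.

```javascript
// Node.js sketch: generate a mono, 16-bit little-endian sine tone as raw PCM.
const fs = require('fs')

function makeSinePcm(freq, seconds, sampleRate, amplitude = 0.5) {
  const n = Math.round(seconds * sampleRate)
  const buf = Buffer.alloc(n * 2) // 2 bytes per Int16 sample
  for (let i = 0; i < n; i++) {
    const v = amplitude * Math.sin((2 * Math.PI * freq * i) / sampleRate)
    buf.writeInt16LE(Math.round(v * 0x7fff), i * 2)
  }
  return buf
}

// Usage: write one second of 440 Hz at 16 kHz, then play it with
//   ffplay -f s16le -ar 16k -ac 1 test.pcm
// fs.writeFileSync('test.pcm', makeSinePcm(440, 1, 16000))
```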

Resampling / changing the sample rate

Although getUserMedia accepts a sampleRate constraint, it did not take effect even in the latest Chrome, so the audio has to be resampled manually.
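To show what manual resampling involves, here is a minimal linear-interpolation sketch. It is an illustration only, not the algorithm wave-resampler (used below) necessarily applies: downsampling should normally be preceded by a low-pass filter to avoid aliasing, which this sketch omits. `resampleLinear` is a hypothetical name.

```javascript
// Linear-interpolation resampler (illustration only, no anti-aliasing filter).
function resampleLinear(input, inputRate, outputRate) {
  if (inputRate === outputRate) return Float32Array.from(input)
  const ratio = inputRate / outputRate
  const outLength = Math.floor(input.length / ratio)
  const output = new Float32Array(outLength)
  for (let i = 0; i < outLength; i++) {
    const pos = i * ratio // fractional position in the input signal
    const left = Math.floor(pos)
    const right = Math.min(left + 1, input.length - 1)
    const frac = pos - left
    // Weighted average of the two neighbouring input samples
    output[i] = input[left] * (1 - frac) + input[right] * frac
  }
  return output
}
```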

```javascript
const mediaStream = await window.navigator.mediaDevices.getUserMedia({
  audio: {
    // sampleRate: 44100, // sample rate: setting it has no effect
    channelCount: 1, // number of channels
    // echoCancellation: true, // reduce echo
    // noiseSuppression: true, // noise suppression: works well in practice
  },
})
```

wave-resampler does the job:

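```javascript
import { resample } from 'wave-resampler'

// In practice, use audioContext.sampleRate as the input rate
const inputSampleRate = 44100
const outputSampleRate = 16000
const resampledBuffers = resample(
  // The buffers from every onAudioProcess frame, merged into one array
  // (mergeArray is defined in the PCM to WAV section)
  mergeArray(audioBuffers),
  inputSampleRate,
  outputSampleRate,
)
```

PCM to MP3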

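```javascript
import { Mp3Encoder } from 'lamejs'
import { resample } from 'wave-resampler'

const outputSampleRate = 16000
const kbps = 128 // bitrate: example value, choose to balance size and quality
const sampleBlockSize = 576 * 10 // working buffer size, a multiple of 576
const mp3Data = []
const mp3Encoder = new Mp3Encoder(1, outputSampleRate, kbps) // 1 = mono
const samples = float32ToInt16(audioBuffers, inputSampleRate, outputSampleRate)

// Encode block by block; stop once every sample has been consumed
for (let i = 0; i < samples.length; i += sampleBlockSize) {
  const chunk = samples.subarray(i, i + sampleBlockSize)
  const mp3buf = mp3Encoder.encodeBuffer(chunk)
  if (mp3buf.length > 0) mp3Data.push(new Int8Array(mp3buf))
}
// Flush the encoder to get the last MP3 frames
const tail = mp3Encoder.flush()
if (tail.length > 0) mp3Data.push(new Int8Array(tail))
console.log(mp3Data)

// Helper: merge frames, resample, then convert Float32 [-1, 1] to Int16
function float32ToInt16(audioBuffers, inputSampleRate, outputSampleRate) {
  const float32 = resample(
    // The buffers from every onAudioProcess frame, merged into one array
    mergeArray(audioBuffers),
    inputSampleRate,
    outputSampleRate,
  )
  return Int16Array.from(
    float32.map(x => {
      const s = Math.max(-1, Math.min(1, x))
      return s > 0 ? s * 0x7fff : s * 0x8000
    }),
  )
}
```

lamejs does the job, but it is fairly large (160+ KB); if you don't need to store the audio, WAV is an alternative. File sizes for the same recordings in both formats: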

```
> ls -alh
-rwxrwxrwx 1 root root  95K 4月 22 12:45 12s.mp3*
-rwxrwxrwx 1 root root 1.1M 4月 22 12:44 12s.wav*
-rwxrwxrwx 1 root root 235K 4月 22 12:41 30s.mp3*
-rwxrwxrwx 1 root root 2.6M 4月 22 12:40 30s.wav*
-rwxrwxrwx 1 root root  63K 4月 22 12:49 8s.mp3*
-rwxrwxrwx 1 root root 689K 4月 22 12:48 8s.wav*
```
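As a rough cross-check of the listing, assuming 16-bit mono at 44.1 kHz (the default sample rate of createWavBuffer in the WAV section), a 12 s WAV is 44 + 12 × 44100 × 2 = 1,058,444 bytes, about 1.0 MiB, which roughly matches 12s.wav. MP3 size is governed mainly by the bitrate; the bitrate used for these files is not stated, so the 64 kbps below is only illustrative. Both helpers are hypothetical.

```javascript
// Uncompressed PCM WAV size: 44-byte header plus raw sample data.
function wavFileSize(seconds, sampleRate, channels, bitsPerSample) {
  const dataBytes = Math.round(seconds * sampleRate) * channels * (bitsPerSample / 8)
  return 44 + dataBytes
}

// Approximate constant-bitrate MP3 size (ignores tags and frame padding).
function mp3FileSize(seconds, kbps) {
  return Math.round((seconds * kbps * 1000) / 8)
}
```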

PCM to WAV

```javascript
// Merge the per-frame Float32Arrays into one contiguous array
function mergeArray(list) {
  const length = list.reduce((sum, frame) => sum + frame.length, 0)
  const data = new Float32Array(length)
  let offset = 0
  for (let i = 0; i < list.length; i++) {
    data.set(list[i], offset)
    offset += list[i].length
  }
  return data
}

// Write an ASCII string into the view byte by byte
function writeUTFBytes(view, offset, string) {
  for (let i = 0; i < string.length; i++) {
    view.setUint8(offset + i, string.charCodeAt(i))
  }
}

// audioData: Float32 samples in [-1, 1] (interleaved L/R for stereo)
function createWavBuffer(audioData, sampleRate = 44100, channels = 1) {
  const WAV_HEAD_SIZE = 44
  const dataLength = audioData.length * 2 // 16-bit samples: 2 bytes each
  const buffer = new ArrayBuffer(WAV_HEAD_SIZE + dataLength)
  // A DataView is needed to write into the buffer
  const view = new DataView(buffer)
  // RIFF chunk descriptor/identifier
  writeUTFBytes(view, 0, 'RIFF')
  // RIFF chunk length: file size minus the 8 bytes of 'RIFF' and this field
  view.setUint32(4, 36 + dataLength, true)
  // RIFF type
  writeUTFBytes(view, 8, 'WAVE')
  // fmt sub-chunk identifier (4 bytes, including the trailing space)
  writeUTFBytes(view, 12, 'fmt ')
  // fmt chunk length
  view.setUint32(16, 16, true)
  // audio format: 1 = PCM (raw)
  view.setUint16(20, 1, true)
  // number of channels
  view.setUint16(22, channels, true)
  // sample rate
  view.setUint32(24, sampleRate, true)
  // byte rate (sample rate * block align)
  view.setUint32(28, sampleRate * channels * 2, true)
  // block align (channel count * bytes per sample)
  view.setUint16(32, channels * 2, true)
  // bits per sample
  view.setUint16(34, 16, true)
  // data sub-chunk identifier
  writeUTFBytes(view, 36, 'data')
  // data chunk length
  view.setUint32(40, dataLength, true)
  // Write the PCM samples, clamped to [-1, 1] and scaled to Int16
  let index = WAV_HEAD_SIZE
  const volume = 1
  for (let i = 0; i < audioData.length; i++) {
    const s = Math.max(-1, Math.min(1, audioData[i] * volume))
    view.setInt16(index, s < 0 ? s * 0x8000 : s * 0x7fff, true)
    index += 2
  }
  return buffer
}

// Pass in the buffers from every onAudioProcess frame, merged into one array
createWavBuffer(mergeArray(audioBuffers))
```

A WAV file is essentially PCM data preceded by a header describing the audio format (sample rate, channel count, bit depth).
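To check the header that createWavBuffer writes, its fields can be read back with a DataView. A minimal sketch assuming the canonical 44-byte layout used above ("fmt " at offset 12, "data" at offset 36); `parseWavHeader` and `readTag` are hypothetical names, and files with extra chunks would need a real chunk walker.

```javascript
// Read a 4-character ASCII tag such as "RIFF" or "fmt "
function readTag(view, offset) {
  let s = ''
  for (let i = 0; i < 4; i++) s += String.fromCharCode(view.getUint8(offset + i))
  return s
}

// Parse the fields of a canonical 44-byte PCM WAV header
function parseWavHeader(arrayBuffer) {
  const view = new DataView(arrayBuffer)
  return {
    riff: readTag(view, 0),
    riffSize: view.getUint32(4, true), // should equal file size - 8
    wave: readTag(view, 8),
    fmt: readTag(view, 12), // must be "fmt " with a trailing space
    audioFormat: view.getUint16(20, true), // 1 = integer PCM
    channels: view.getUint16(22, true),
    sampleRate: view.getUint32(24, true),
    byteRate: view.getUint32(28, true), // sampleRate * blockAlign
    blockAlign: view.getUint16(32, true), // channels * bytes per sample
    bitsPerSample: view.getUint16(34, true),
    data: readTag(view, 36),
    dataSize: view.getUint32(40, true),
  }
}
```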

Simple short-time energy calculation

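```javascript
// Sum of squared samples over each 256-sample window
function shortTimeEnergy(audioData) {
  let sum = 0
  const energy = []
  const { length } = audioData
  for (let i = 0; i < length; i++) {
    sum += audioData[i] ** 2
    if ((i + 1) % 256 === 0) {
      energy.push(sum)
      sum = 0
    } else if (i === length - 1) {
      // trailing partial window
      energy.push(sum)
    }
  }
  return energy
}
```

Because the results vary widely with each device's recording gain and the raw values can be large, a ratio (peak energy over average energy) is used to roughly tell voice from noise.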

View the DEMO

```javascript
// Peak-to-average energy ratio above which a frame is treated as voice
const NoiseVoiceWatershedWave = 2.3
const energy = shortTimeEnergy(e.inputBuffer.getChannelData(0).slice(0))
const avg = energy.reduce((a, b) => a + b) / energy.length
const nextState = Math.max(...energy) / avg > NoiseVoiceWatershedWave ? 'voice' : 'noise'
```
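The two snippets above can be combined into one self-contained classifier. A minimal sketch; `classifyFrame` is a hypothetical name, the 256-sample window and 2.3 threshold are the article's values and may need tuning per device, and an all-silent frame is treated as noise to avoid dividing by zero.

```javascript
// Short-time energy over fixed-size windows (last window may be partial)
function shortTimeEnergy(audioData, windowSize = 256) {
  const energy = []
  let sum = 0
  for (let i = 0; i < audioData.length; i++) {
    sum += audioData[i] ** 2
    if ((i + 1) % windowSize === 0 || i === audioData.length - 1) {
      energy.push(sum)
      sum = 0
    }
  }
  return energy
}

// Classify a frame by the ratio of its peak window energy to the average
function classifyFrame(frame, threshold = 2.3) {
  const energy = shortTimeEnergy(frame)
  if (energy.length === 0) return 'noise'
  const avg = energy.reduce((a, b) => a + b, 0) / energy.length
  if (avg === 0) return 'noise' // pure silence
  return Math.max(...energy) / avg > threshold ? 'voice' : 'noise'
}
```

A steady signal gives a ratio near 1 (noise), while a short loud burst inside a quiet frame pushes the peak far above the average (voice).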

Optimizing performance with Web Workers

Audio data is large, so the processing can be moved into a Web Worker to keep the UI thread responsive.
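Because the buffers are large, a further option (not used in the original code) is to transfer rather than copy them: `postMessage` accepts a transfer list, e.g. `worker.postMessage(msg, [pcm.buffer])`, after which the sender's buffer is detached. The sketch below shows those semantics with `structuredClone`, which follows the same transfer rules; it assumes Node 17+ or a modern browser, and `transferPcm` is a hypothetical helper.

```javascript
// Move a Float32Array's underlying ArrayBuffer instead of copying it.
function transferPcm(pcm) {
  const cloned = structuredClone({ pcm }, { transfer: [pcm.buffer] })
  return cloned.pcm // the original pcm is now detached (length 0)
}
```

For the `audioBuffers` array of frames, the transfer list would be `audioBuffers.map(frame => frame.buffer)`, which works because each frame was copied into its own buffer with `.slice(0)`.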

In a Webpack project, Web Workers are easy to set up: just install worker-loader.

preact.config.js

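```javascript
export default (config, env, helpers) => {
  config.module.rules.push({
    // Files ending in .worker.js are bundled through worker-loader
    test: /\.worker\.js$/,
    // inline: true is the worker-loader 2.x form; 3.x expects 'fallback' or 'no-fallback'
    use: { loader: 'worker-loader', options: { inline: true } },
  })
}
```

recorder.worker.js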

```javascript
self.addEventListener('message', event => {
  console.log(event.data)
  // Convert to MP3 / Base64 / WAV etc. here
  const output = ''
  self.postMessage(output)
})
```

Using the worker:

```javascript
async function toMP3(audioBuffers, inputSampleRate, outputSampleRate = 16000) {
  const { default: Worker } = await import('./recorder.worker')
  // Kept simple here; in a real project, create the worker when the recorder
  // is instantiated, and use several instances if you need concurrency
  const worker = new Worker()
  return new Promise(resolve => {
    worker.onmessage = event => resolve(event.data)
    worker.postMessage({
      audioBuffers,
      inputSampleRate,
      outputSampleRate,
      type: 'mp3',
    })
  })
}
```

Audio storage

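Browsers offer persistent storage through LocalStorage and IndexedDB. LocalStorage is more commonly used but can only store strings, while IndexedDB can store Blobs directly, so IndexedDB is preferred. With LocalStorage the audio would have to be converted to Base64, which makes it even larger.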
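The Base64 overhead is easy to quantify: Base64 turns every 3 bytes into 4 characters (padding the last group), so data grows by about a third. For example, a 97,280-byte (95 KiB) MP3 would become 129,708 characters. `base64Length` is a hypothetical helper.

```javascript
// Length of the Base64 encoding of byteLength bytes (with '=' padding)
function base64Length(byteLength) {
  return 4 * Math.ceil(byteLength / 3)
}
```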

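To avoid taking up too much of the user's storage, the audio is stored as MP3 (see the size comparison in the PCM to MP3 section).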


A simple IndexedDB wrapper follows; if you're used to backend development, an ORM-style library can make reads and writes more convenient.

```javascript
const indexedDB =
  window.indexedDB || window.webkitIndexedDB || window.mozIndexedDB || window.OIndexedDB || window.msIndexedDB
const IDBTransaction =
  window.IDBTransaction || window.webkitIDBTransaction || window.OIDBTransaction || window.msIDBTransaction
// Very old implementations exposed the mode as a constant instead of a string
const readWriteMode =
  typeof IDBTransaction.READ_WRITE === 'undefined' ? 'readwrite' : IDBTransaction.READ_WRITE
const dbVersion = 1
const storeDefault = 'mp3'
let dbLink

function initDB(store) {
  return new Promise((resolve, reject) => {
    // Reuse the open connection if there is one
    if (dbLink) return resolve(dbLink)
    // Create/open the database
    const request = indexedDB.open('audio', dbVersion)
    // Fires after any upgrade has finished, so the store exists by now
    request.onsuccess = () => {
      dbLink = request.result
      dbLink.onerror = event => reject(event)
      resolve(dbLink)
    }
    request.onerror = event => reject(event)
    // Fires when the database is created or its version increases
    request.onupgradeneeded = event => {
      const db = event.target.result
      if (!db.objectStoreNames.contains(store)) {
        db.createObjectStore(store)
      }
    }
  })
}

export const writeIDB = async (name, blob, store = storeDefault) => {
  const db = await initDB(store)
  const transaction = db.transaction([store], readWriteMode)
  const objStore = transaction.objectStore(store)
  return new Promise((resolve, reject) => {
    const request = objStore.put(blob, name) // name is used as the key
    request.onsuccess = event => resolve(event)
    request.onerror = event => reject(event)
    // commit() is not available in every browser
    transaction.commit && transaction.commit()
  })
}

export const readIDB = async (name, store = storeDefault) => {
  const db = await initDB(store)
  // Reading only needs a read-only transaction
  const transaction = db.transaction([store], 'readonly')
  const objStore = transaction.objectStore(store)
  return new Promise((resolve, reject) => {
    const request = objStore.get(name)
    request.onsuccess = event => resolve(event.target.result)
    request.onerror = event => reject(event)
    transaction.commit && transaction.commit()
  })
}

export const clearIDB = async (store = storeDefault) => {
  const db = await initDB(store)
  const transaction = db.transaction([store], readWriteMode)
  const objStore = transaction.objectStore(store)
  return new Promise((resolve, reject) => {
    const request = objStore.clear()
    request.onsuccess = event => resolve(event)
    request.onerror = event => reject(event)
    transaction.commit && transaction.commit()
  })
}
```

Enabling WebRTC in WebView

See "WebView WebRTC not working".

```java
webView.setWebChromeClient(new WebChromeClient() {
    @TargetApi(Build.VERSION_CODES.LOLLIPOP)
    @Override
    public void onPermissionRequest(final PermissionRequest request) {
        // Grant every resource the page requests (e.g. the microphone for getUserMedia).
        // The app itself must also hold the RECORD_AUDIO permission.
        request.grant(request.getResources());
    }
});
```

That concludes this summary of HTML5 recording practice (Preact).
